...

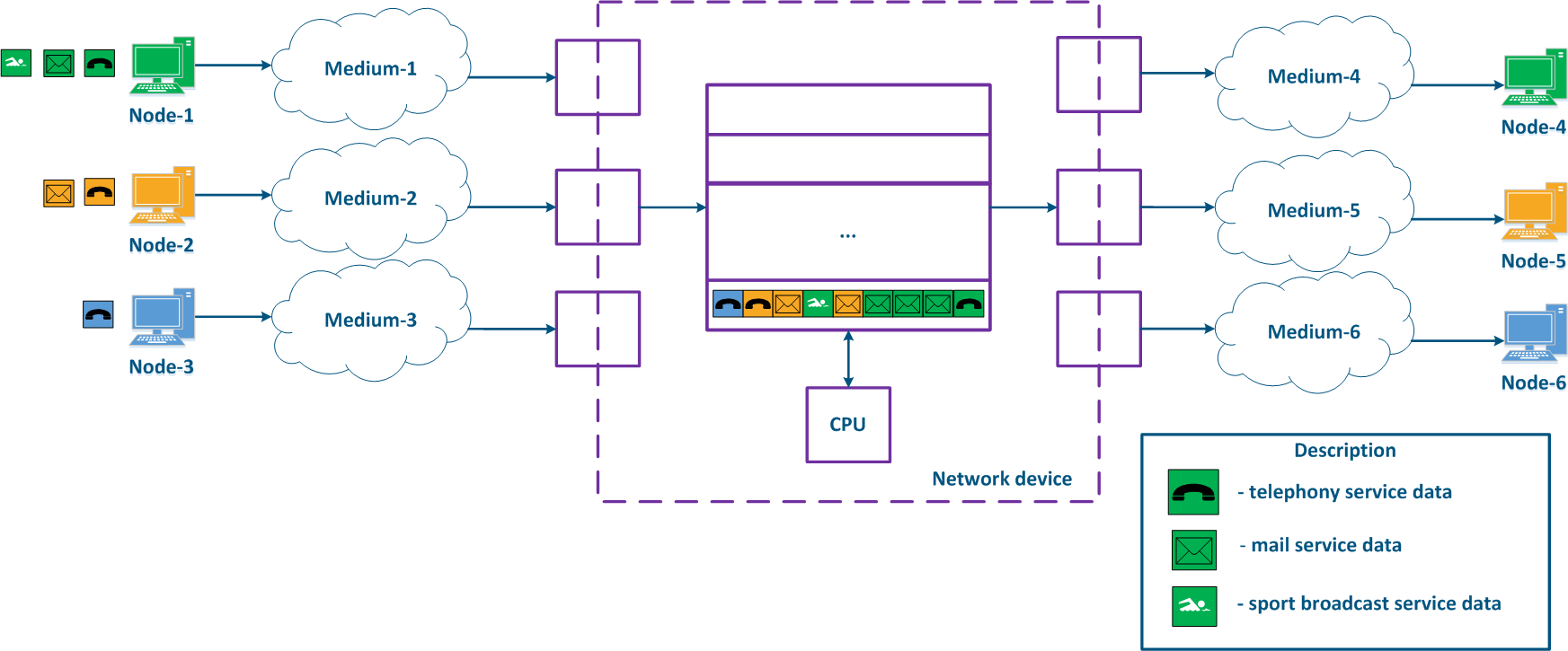

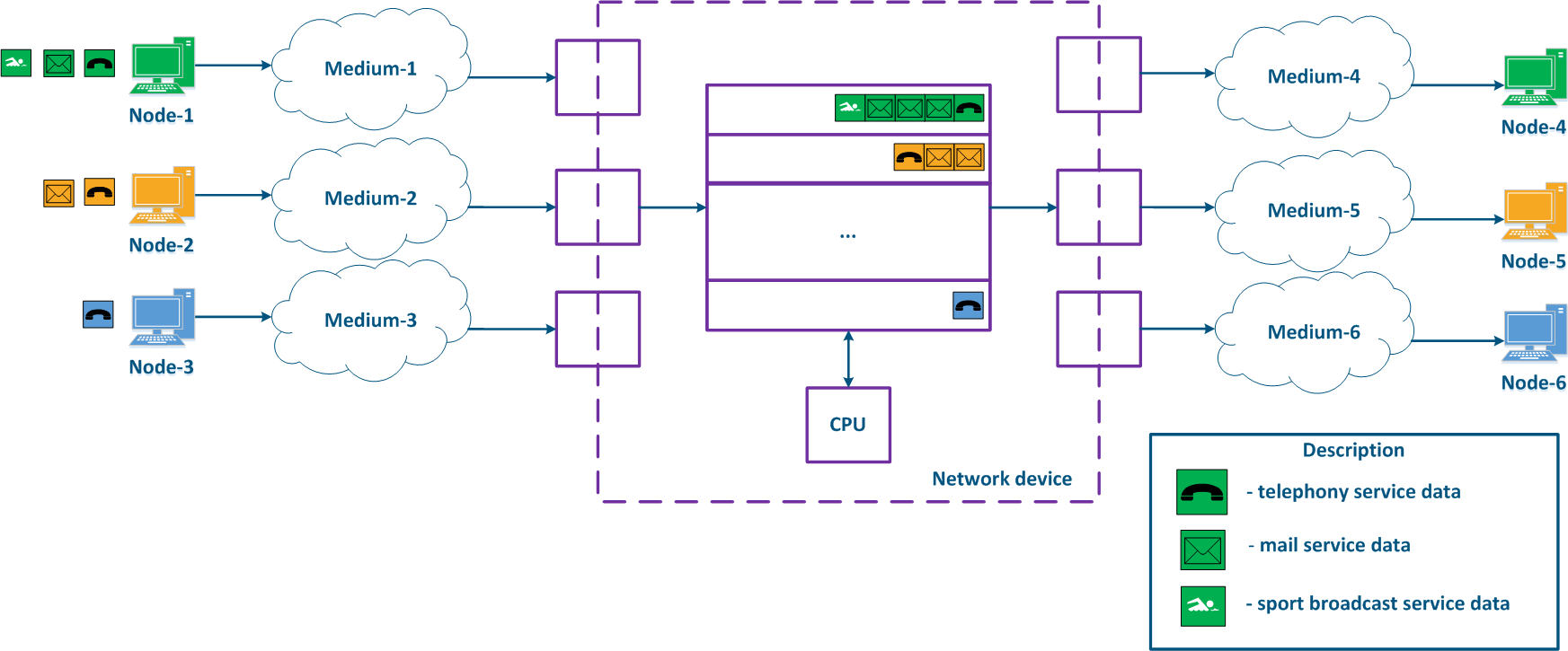

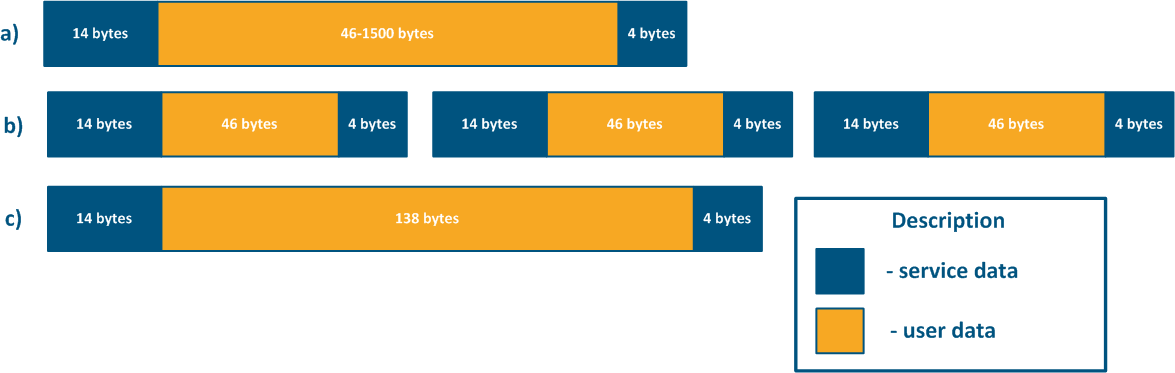

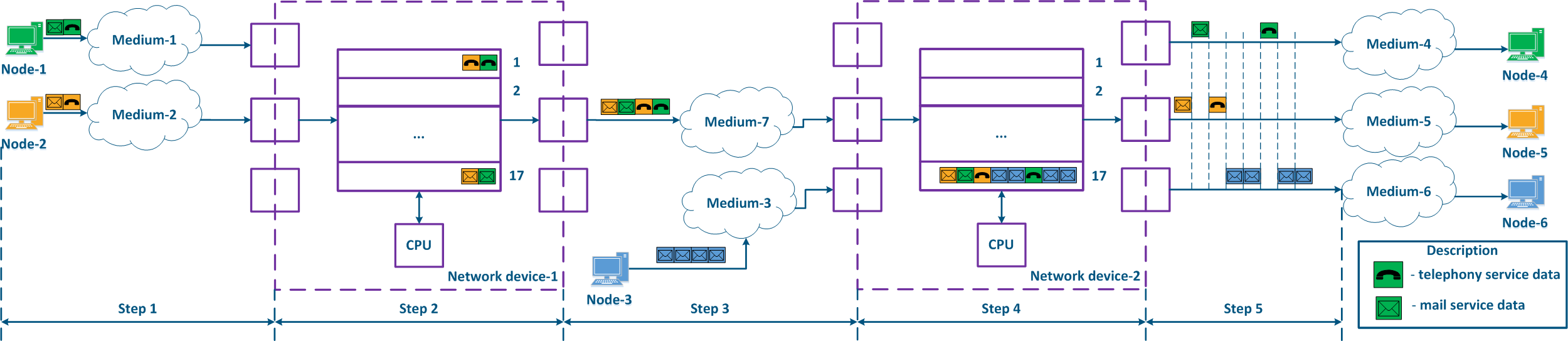

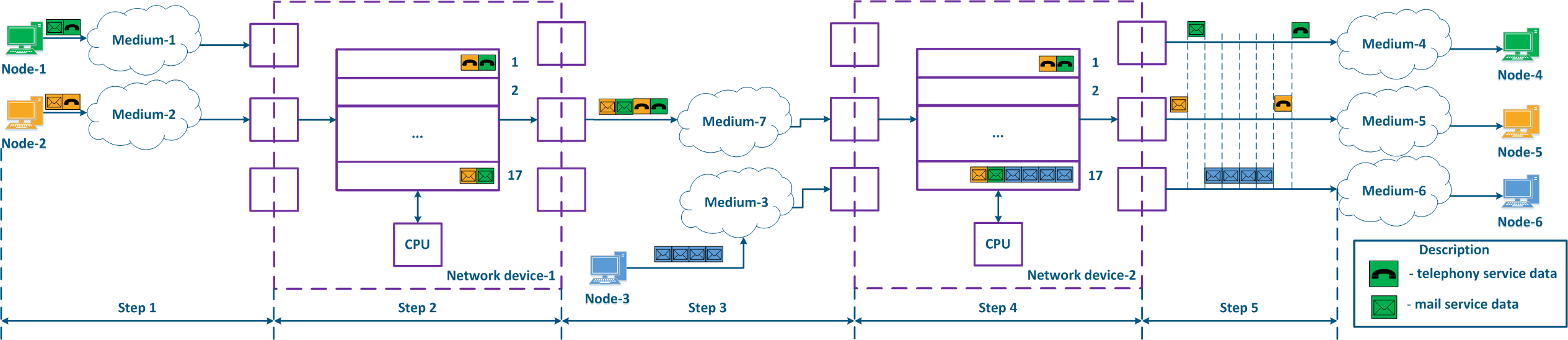

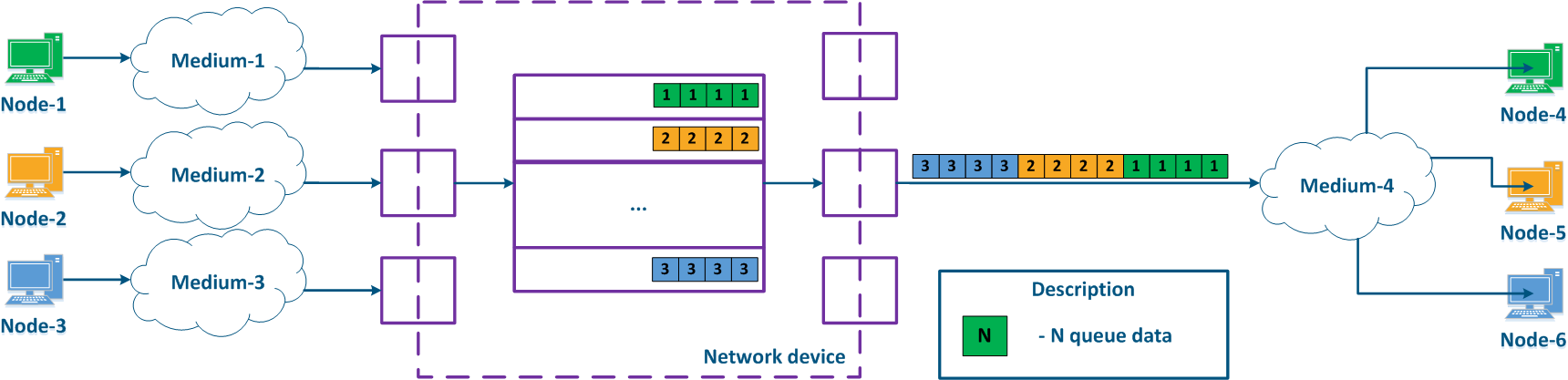

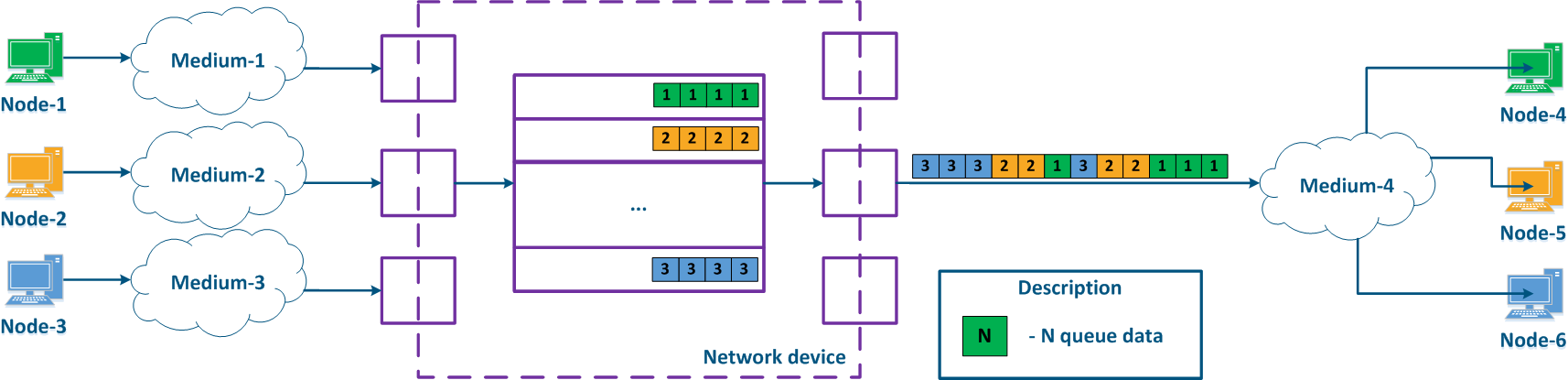

With QoS configured, each incoming traffic flow can be classified (for example, by its type) and a separate queue can be mapped to each class (Figure 2b). Each packet queue can be assigned a priority, which is taken into account when packets are extracted from the queues and guarantees specific quality indicators. Traffic flows can be classified not only by the services used, but also by other criteria. For example, each pair of nodes can be assigned to a separate packet queue (Figure 2c).

Figure 2a - Queuing for various services without QoS

Figure 2b - Queuing for various services with QoS

Figure 2c - Queuing for various users with QoS
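The two classification criteria described above (by service, Figure 2b, and by node pair, Figure 2c) can be sketched as follows. This is a minimal illustration, not the implementation used by any device: the packet fields (`service`, `src`, `dst`), the node names and the helper functions are all hypothetical.

```python
from collections import defaultdict, deque

def classify_by_service(packet):
    """Queue key in the spirit of Figure 2b: one class per service type."""
    return packet["service"]

def classify_by_node_pair(packet):
    """Queue key in the spirit of Figure 2c: one class per (source, destination) pair."""
    return (packet["src"], packet["dst"])

def enqueue_all(packets, classifier):
    """Place every packet into the queue selected by the classifier."""
    queues = defaultdict(deque)
    for packet in packets:
        queues[classifier(packet)].append(packet)
    return queues

# Hypothetical sample traffic.
packets = [
    {"service": "voice", "src": "Node-1", "dst": "Node-4"},
    {"service": "video", "src": "Node-2", "dst": "Node-5"},
    {"service": "voice", "src": "Node-2", "dst": "Node-5"},
]

by_service = enqueue_all(packets, classify_by_service)
by_pair = enqueue_all(packets, classify_by_node_pair)
```

The same packets end up in different queues depending on the classifier, which is why the classification rules have to be chosen to match the quality requirements of the services.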

...

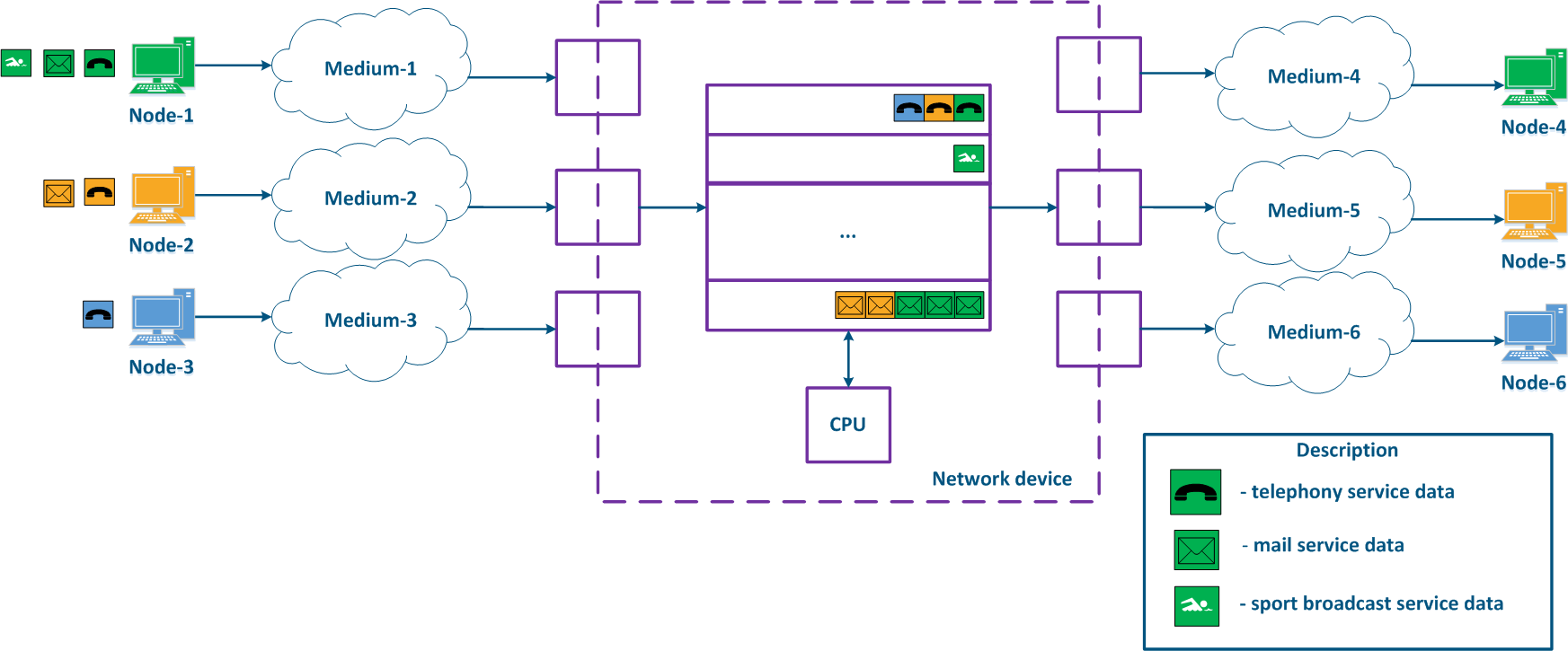

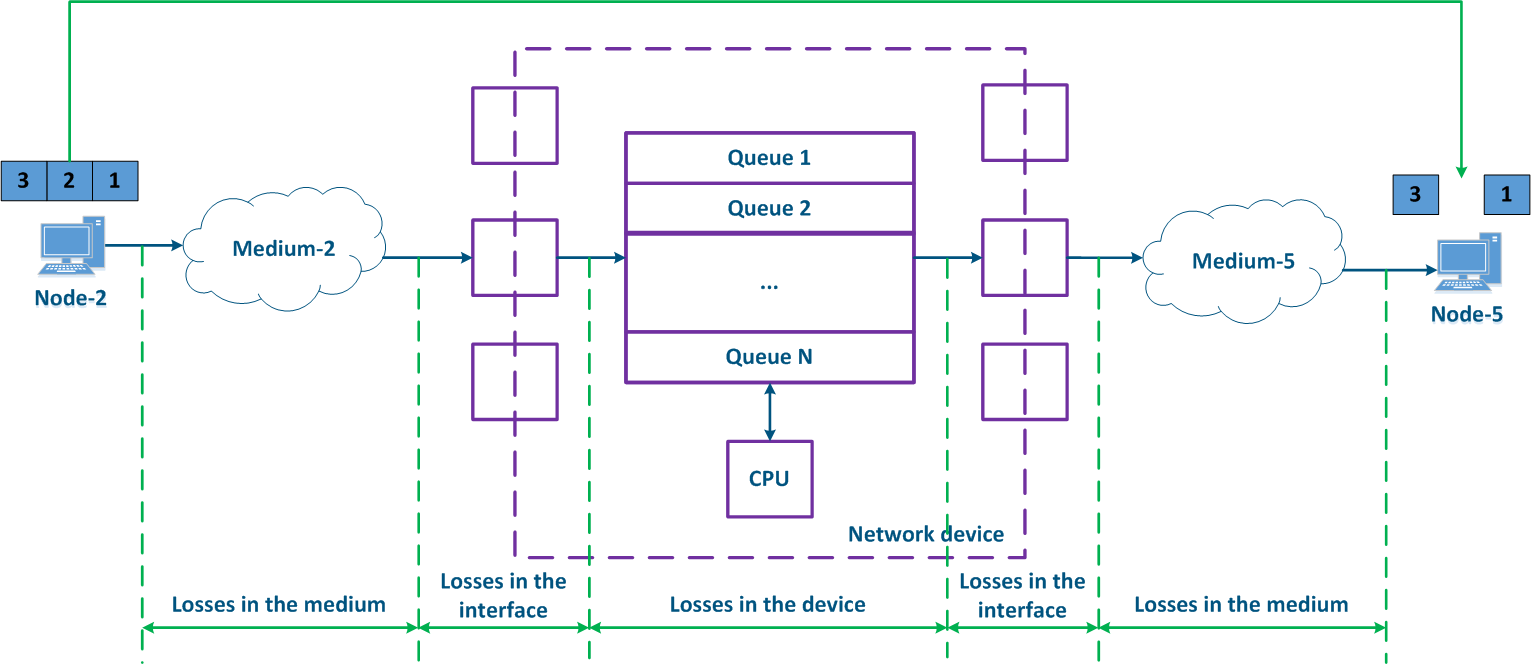

- Losses in the medium: losses related to the propagation of the signal in the physical environment. For example, a frame will be lost if the useful signal level is lower than the receiver sensitivity. Losses can also be caused by physical damage to the interfaces connected to the medium or by impulse interference resulting from poor grounding.

- Losses on the interface: losses while processing a queue at the incoming or at the outgoing interface. Each interface has a memory buffer, which can be completely filled during intensive data transmission. In this case, all subsequent data entering the interface will be discarded, because it cannot be buffered.

- Losses inside the device: data discarded by the network device according to the logic of its configuration. If the queues are full and the incoming data cannot be added to the processing queue, the network device will drop it. These losses also include the data packets rejected by access lists and by the firewall.

Figure 3 - Data packet loss example

...

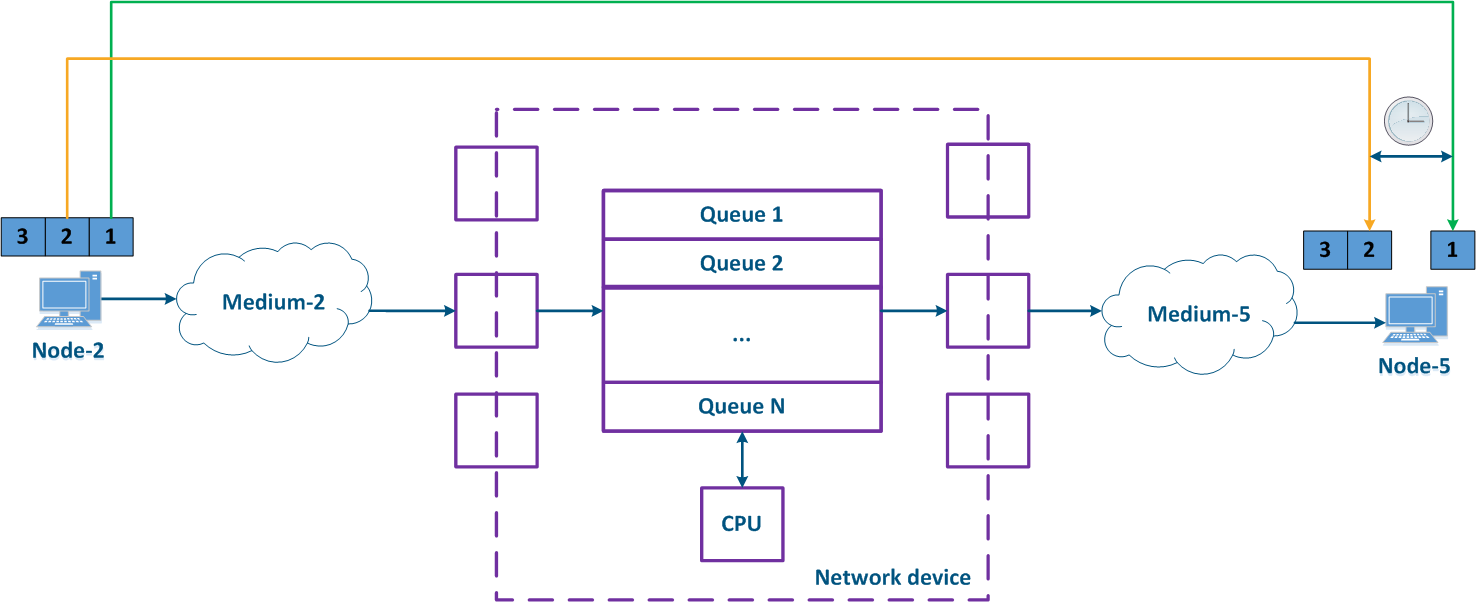

For example, the service header length for 64-byte frames (Figure 4b) and 156-byte frames (Figure 4c) is the same, but the amount of user data differs. To transmit 138 bytes of user data, either three 64-byte frames or one 156-byte frame is required, so 192 bytes are sent in the first case and only 156 bytes in the second. For a link with a fixed throughput, large frames increase efficiency by raising the useful throughput of the system, but the latency also increases. The performance of the Infinet devices in various conditions is shown in the "Performance of the InfiNet Wireless devices" document.

Figure 4 - Frame structure for various Ethernet frame lengths
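The arithmetic in the example above can be reproduced with a short sketch. The 18-byte service header is not stated explicitly in the text; it is the value implied by the numbers given (156 - 138 = 18, and three 64-byte frames carrying 46 bytes each). The function names are illustrative.

```python
HEADER_BYTES = 18  # assumption: implied by the example (156 - 138 = 18)

def bytes_on_wire(user_bytes, frame_bytes, header_bytes=HEADER_BYTES):
    """Return (frame_count, total_bytes_sent) when using fixed-size frames."""
    payload_per_frame = frame_bytes - header_bytes
    # Integer ceiling division: a partially filled frame still has to be sent.
    frames = (user_bytes + payload_per_frame - 1) // payload_per_frame
    return frames, frames * frame_bytes

def efficiency(user_bytes, frame_bytes, header_bytes=HEADER_BYTES):
    """Share of the transmitted bytes that is user data."""
    _, total = bytes_on_wire(user_bytes, frame_bytes, header_bytes)
    return user_bytes / total

small = bytes_on_wire(138, 64)    # three 64-byte frames, 192 bytes in total
large = bytes_on_wire(138, 156)   # one 156-byte frame, 156 bytes in total
```

With these numbers the efficiency is about 72 % for 64-byte frames and about 88 % for the 156-byte frame.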

...

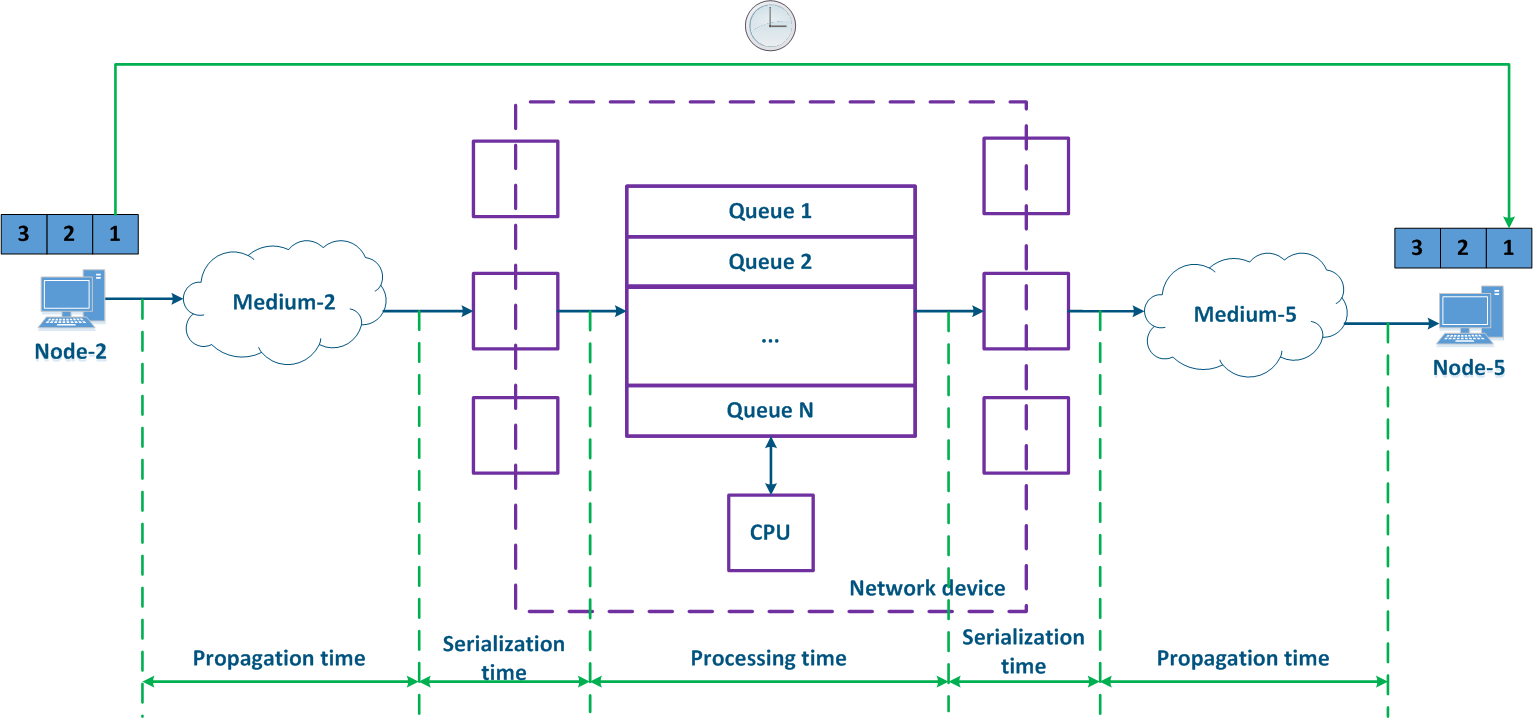

The delay is often measured as a round-trip time (RTT), i.e. the time it takes for a data packet to travel from the source to the destination and back. For example, this value is reported in the output of the ping command. The time that intermediate network devices take to process data packets may differ between the forward and the return direction, so the round-trip time is usually not equal to twice the one-way delay.

Figure 5 - Example of data transfer delay

...

The CPU load and the state of the packet queues change frequently on the intermediate network devices, so the delay varies from packet to packet. In the example below (Figure 6), the transmission times of the packets with identifiers 1 and 2 are different. The difference between the maximum and the average delay values is called jitter.

Figure 6 - Example of varying delay in data transfer
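As a small illustration, jitter can be computed from a series of measured delays using the definition given above (maximum delay minus average delay). The delay values are invented for the example, and other definitions of jitter exist elsewhere; this sketch follows only the one used in this document.

```python
def jitter(delays_ms):
    """Jitter as defined in the text: maximum delay minus average delay."""
    if not delays_ms:
        raise ValueError("at least one delay measurement is required")
    average = sum(delays_ms) / len(delays_ms)
    return max(delays_ms) - average

# Hypothetical one-way delays of four packets, in milliseconds.
delays = [10.0, 14.0, 12.0, 20.0]
result = jitter(delays)  # average is 14.0 ms, maximum is 20.0 ms
```

For these values the jitter is 6 ms.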

...

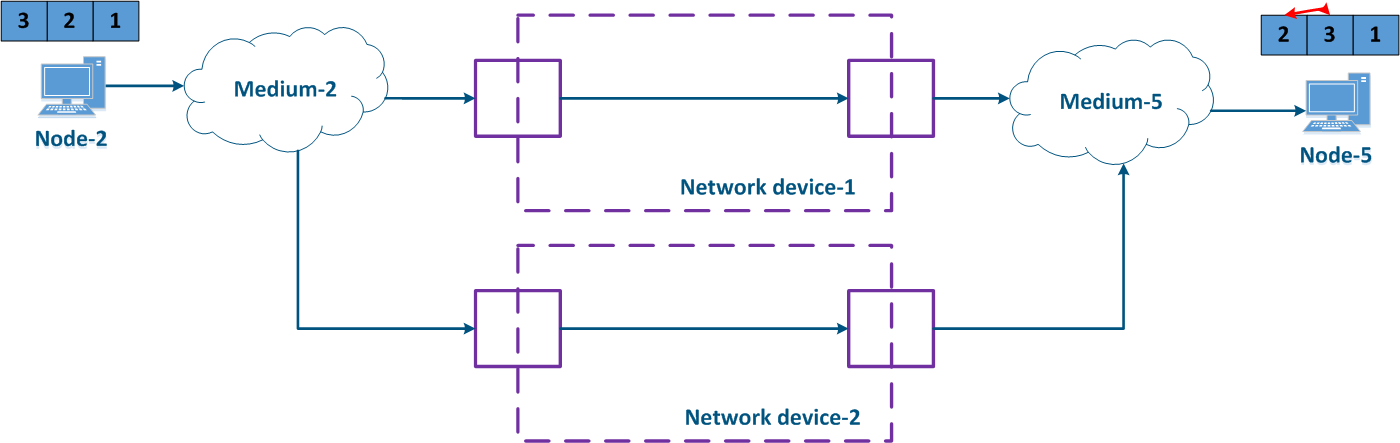

The effect depends on the characteristics of the service and on the ability of the higher layer network protocols to restore the original sequence. Usually, if the traffic of different services is transmitted through different paths, then it should not affect the ordering of the received data.

Figure 7 - Example of unordered data delivery

...

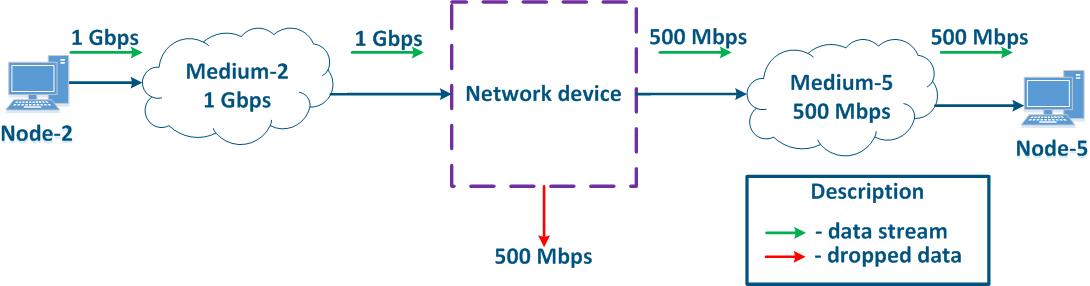

Let's look at the example below (Figure 8). Node-2 generates traffic for different services at a total rate of 1 Gbps. Medium-2 can carry this data stream to an intermediate network device, but the maximum link throughput between the Network device and Node-5 is 500 Mbps. Obviously, the data stream cannot be processed completely and part of it must be dropped. The task of QoS is to make these drops manageable in order to provide the required metric values for the end services. Of course, it is impossible to provide the required performance for all the services, because the incoming traffic exceeds the link throughput; therefore, the QoS policy implies that the traffic of the critical services is processed first.

Figure 8 - Example of inconsistency between the incoming traffic amount and the link throughput
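The idea of "manageable drops" can be sketched as follows: when 1 Gbps of traffic meets a 500 Mbps link, capacity is given to the services in priority order and only the remainder is dropped. The service names, priorities and per-service rates below are hypothetical; only the 1 Gbps total and the 500 Mbps link come from the example above.

```python
def allocate_by_priority(demands, link_mbps):
    """Serve demands in descending priority order.

    demands: list of (name, priority, demand_mbps); a higher priority is served first.
    Returns {name: (forwarded_mbps, dropped_mbps)}.
    """
    remaining = link_mbps
    result = {}
    for name, _priority, demand in sorted(demands, key=lambda d: d[1], reverse=True):
        forwarded = min(demand, remaining)
        remaining -= forwarded
        result[name] = (forwarded, demand - forwarded)
    return result

# Hypothetical split of the 1 Gbps stream generated by Node-2.
demands = [("voice", 7, 100), ("video", 5, 300), ("bulk", 1, 600)]
plan = allocate_by_priority(demands, link_mbps=500)
```

In this sketch the critical services are forwarded in full and all 500 Mbps of drops fall on the lowest-priority service.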

...

Keep in mind that implementing QoS policies is not the only method to ensure the quality metrics. For an optimal effect, the QoS configuration should be synchronized with other settings. For example, using the TDMA technology instead of Polling on the InfiLINK 2x2 and InfiMAN 2x2 families of devices reduces jitter by stabilizing the value of the delay (see TDMA and Polling: Application features).

Figure 9a - Example of data distribution with partly implemented QoS policies

Figure 9b - Example of data distribution with implemented QoS policies

...

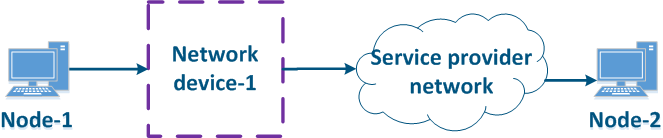

- White-box: all the network devices along the data propagation path are in the same responsibility zone. In this case, the QoS configuration on the devices can be synchronized, according to the requirements specified in the section above.

- Black-box: some network devices in the data propagation path are part of an external responsibility zone. The classification rules for incoming data and the algorithm for emptying the queues are configured individually on each device. The architecture of the packet queues' implementation depends on the manufacturer of the equipment, so there is no guarantee that QoS is configured correctly on the devices in the external responsibility zone and, as a result, no guarantee of high-quality performance indicators.

Figure 10a - White-box structure example

Figure 10b - Black-box structure example

...

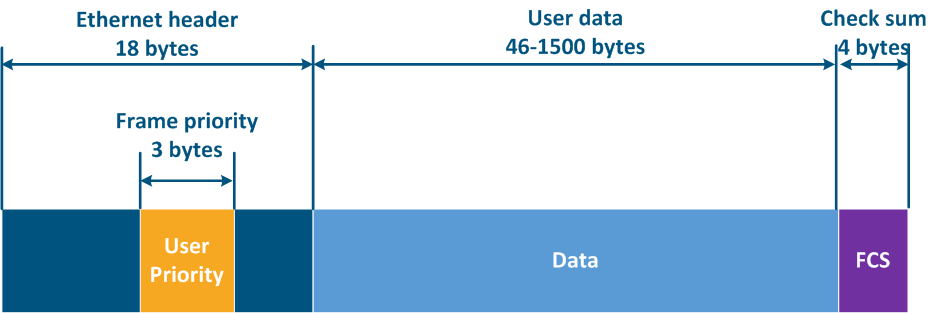

The Ethernet frame header includes the "User Priority" service field, which is used to prioritize the data frames. The field is 3 bits long, which allows 8 traffic classes to be distinguished: 0 is the lowest priority class and 7 is the highest. Keep in mind that the "User Priority" field is present only in 802.1Q frames, i.e. frames using VLAN tagging.

Figure 11 - Frame prioritization service field in the Ethernet header
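To make the layout concrete, the sketch below reads the 3-bit priority from a raw Ethernet frame. It relies on the standard 802.1Q tag layout (a 0x8100 tag identifier after the two MAC addresses, followed by a 16-bit field whose top 3 bits are the priority and whose low 12 bits are the VLAN ID). The function name and the sample frames are illustrative only.

```python
import struct

TPID_8021Q = 0x8100  # tag protocol identifier of an 802.1Q tag

def parse_vlan_tag(frame: bytes):
    """Return (priority, vlan_id) for an 802.1Q-tagged frame, or None if untagged."""
    if len(frame) < 16:
        return None
    # Bytes 0-11 are the destination and source MAC addresses.
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None              # no VLAN tag, so no priority field
    priority = tci >> 13         # top 3 bits: "User Priority"
    vlan_id = tci & 0x0FFF       # low 12 bits: VLAN ID
    return priority, vlan_id

# Hypothetical frames: zeroed MAC addresses, IPv4 EtherType (0x0800).
tagged = bytes(12) + struct.pack("!HHH", TPID_8021Q, (5 << 13) | 161, 0x0800)
untagged = bytes(12) + struct.pack("!H", 0x0800) + bytes(4)

tagged_info = parse_vlan_tag(tagged)      # priority 5, VLAN 161
untagged_info = parse_vlan_tag(untagged)  # None: the field is absent
```

The untagged case shows why a frame without a VLAN tag cannot carry a priority in the Ethernet header.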

...

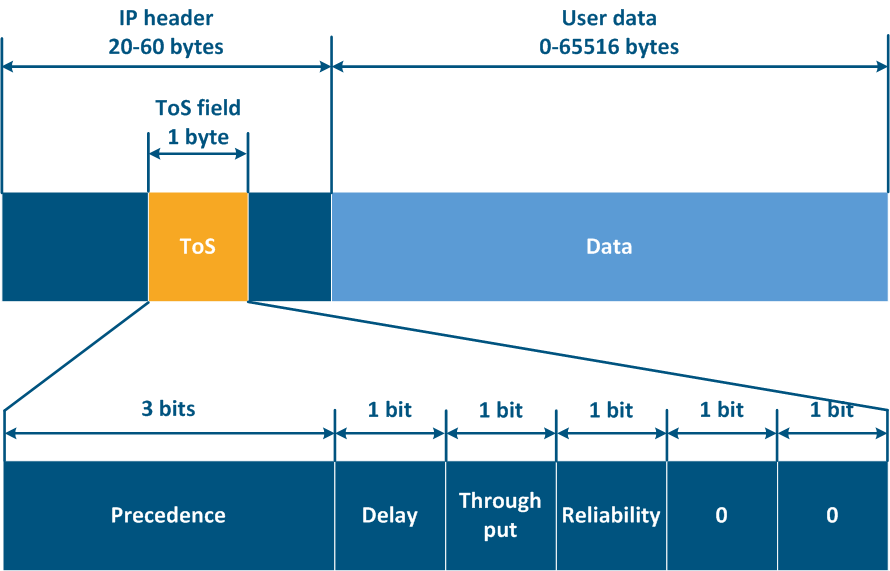

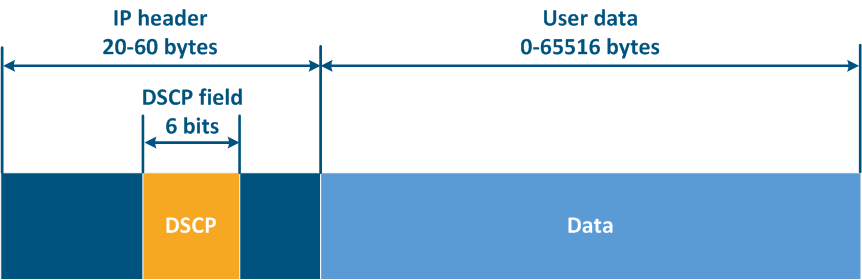

Thus, ToS makes it possible to distinguish 8 traffic classes (0 is the lowest priority, 7 is the highest), while DSCP distinguishes 64 classes (0 is the lowest priority, 63 is the highest).

Figure 12a - ToS service field in the IP packet header

Figure 12b - DSCP service field in the IP packet header
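Both values are read from the same byte of the IP header: the 3-bit ToS priority occupies the three most significant bits and DSCP the six most significant bits. A minimal sketch of the extraction, with illustrative function names:

```python
def ip_precedence(tos_byte: int) -> int:
    """3-bit ToS priority (0..7): the three most significant bits of the byte."""
    return (tos_byte >> 5) & 0x07

def dscp(tos_byte: int) -> int:
    """6-bit DSCP value (0..63): the six most significant bits of the byte."""
    return (tos_byte >> 2) & 0x3F

# Example byte 0xB8 = 0b10111000.
sample = 0xB8
sample_precedence = ip_precedence(sample)  # 0b101    -> 5
sample_dscp = dscp(sample)                 # 0b101110 -> 46
```

Because the fields overlap, the upper three bits of a DSCP value are the same bits that a ToS-only device reads as its priority.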

...

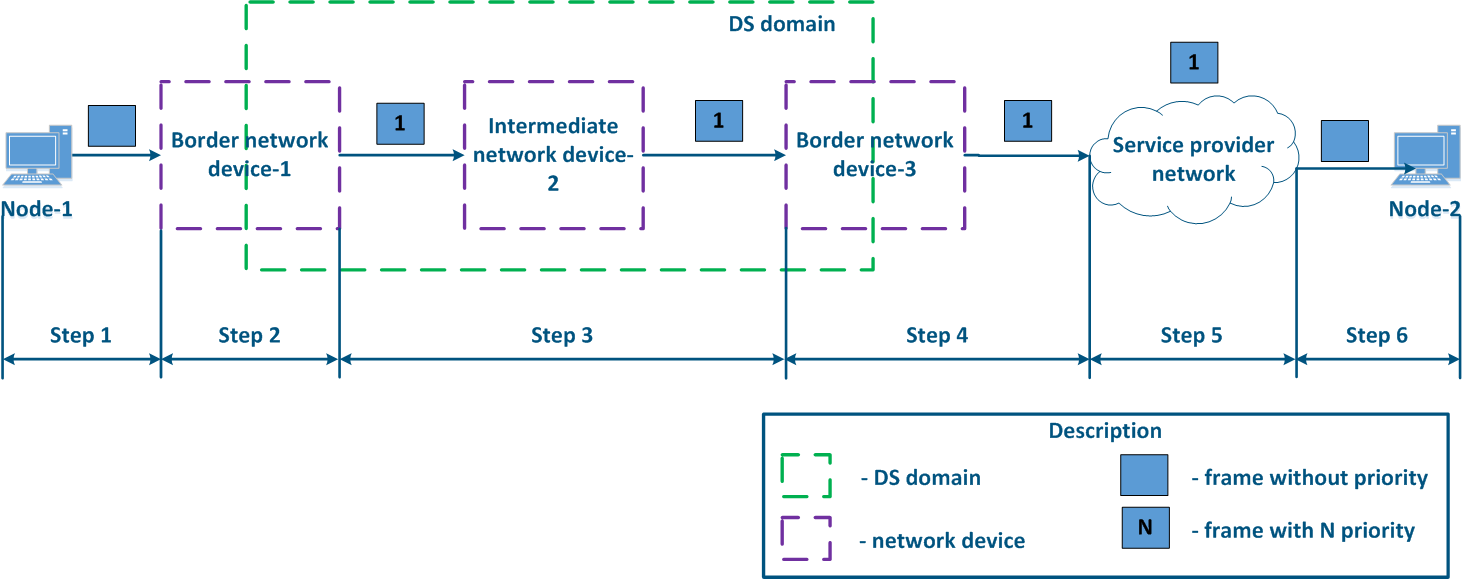

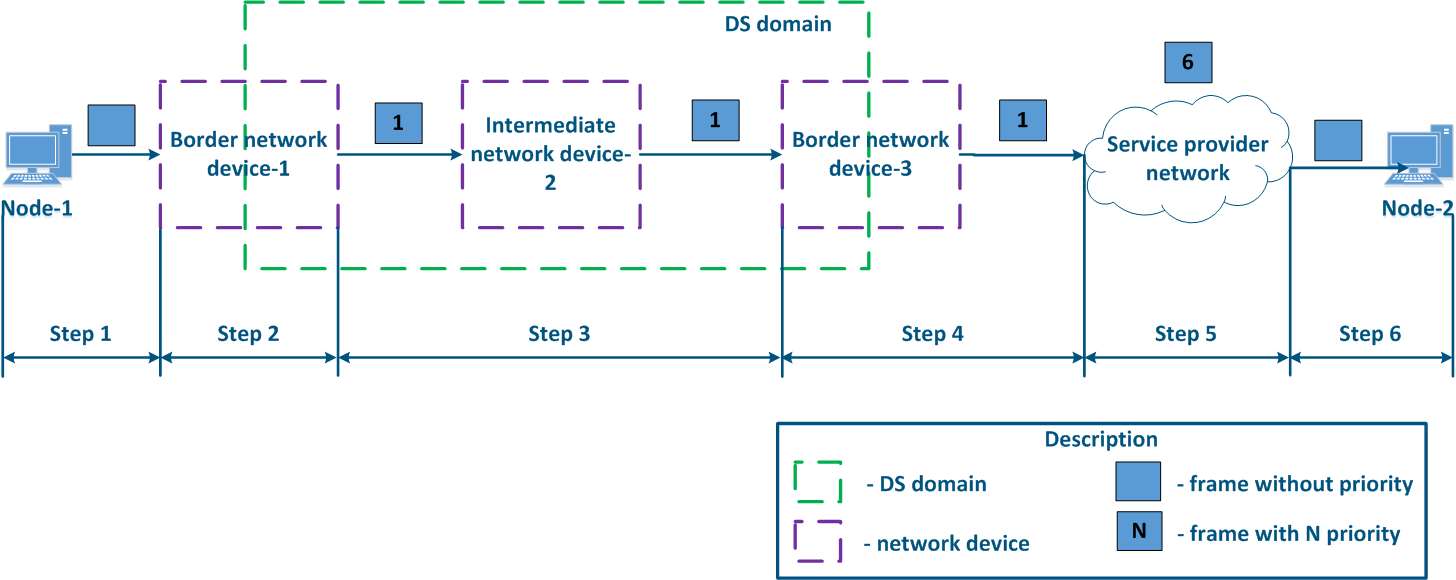

- Step 1: Node-1 generates an Ethernet frame for Node-2. There is no field present for frame priority tagging in the header (Figure 13a).

- Step 2: Border network device-1 changes the Ethernet header, setting the priority to 1. Border devices should have rules configured to separate the traffic of Node-1 from the general stream and to assign a priority to it. In networks with a large number of traffic flows, the list of rules on border devices can be extensive. Border network device-1 processes the frame according to the set priority, placing it in the corresponding queue. The frame is transmitted towards the outgoing interface and sent to Intermediate network device-2 (Figure 13a).

- Step 3: Intermediate network device-2 receives the Ethernet frame having priority 1, and places it in the corresponding priority queue. The device does not handle the priority in terms of changing or removing it inside the frame header. The frame is next transmitted towards the Border network device-3 (Figure 13a).

- Step 4: Border network device-3 processes the incoming frame similarly to Intermediate network device-2 (see Step 3) and forwards it towards the service provider's network (Figure 13a).

- Step 4a: if it has been agreed that the traffic will be transmitted through the provider's network with a priority other than 1, Border network device-3 must change the priority. In this example, the device changes the priority value from 1 to 6 (Figure 13b).

- Step 5: during the transmission of the frame through the provider's network, the devices will take into account the priority value in the Ethernet header (Figure 13a).

- Step 5a: similarly to Step 4a (Figure 13b).

- Step 5b: if there is no agreement about the frame prioritization according to the priority value specified in the Ethernet header, a third-party service provider can apply its own QoS policy and set a priority that may not satisfy the QoS policy of the DS domain (Figure 13c).

- Step 6: the border device in the provider's network removes the priority field from the Ethernet header and forwards it to Node-2 (Figure 13a-c).

Figure 13a - Example of Ethernet frame priority changing during the transmission through two network segments (the priority setting is coordinated and the priority value matches for the 2 segments)

Figure 13b - Example of Ethernet frame priority changing during the transmission through two network segments (the priority setting is coordinated, but the priority should be changed)

Figure 13c - Example of Ethernet frame priority changing during the transmission through two network segments (the priority setting in the 2 segments is not coordinated)
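The steps above can be traced with a small simulation that records the priority value seen after each hop. This is only a sketch of the three scenarios: the function and parameter names are hypothetical, and the value 0 used for the uncoordinated provider policy is an arbitrary assumption, since the text does not say which priority the provider would set.

```python
def trace_priority(border3_remap=None, provider_override=None):
    """Return [(hop, priority)] for a frame travelling from Node-1 to Node-2.

    border3_remap: optional {old: new} mapping applied by Border network device-3 (Step 4a).
    provider_override: optional priority forced by the provider's own policy (Step 5b).
    """
    trace = []
    priority = None                                   # Step 1: no priority field
    trace.append(("Node-1", priority))
    priority = 1                                      # Step 2: border device sets priority 1
    trace.append(("Border network device-1", priority))
    trace.append(("Intermediate network device-2", priority))  # Step 3: unchanged
    if border3_remap:                                 # Step 4 / 4a
        priority = border3_remap.get(priority, priority)
    trace.append(("Border network device-3", priority))
    if provider_override is not None:                 # Step 5 / 5a / 5b
        priority = provider_override
    trace.append(("Provider network", priority))
    priority = None                                   # Step 6: priority field removed
    trace.append(("Provider border device", priority))
    return trace

fig_13a = [p for _, p in trace_priority()]
fig_13b = [p for _, p in trace_priority(border3_remap={1: 6})]
fig_13c = [p for _, p in trace_priority(provider_override=0)]  # 0 is an assumed value
```

Comparing the three traces shows that only the coordinated scenarios keep the priority under the control of the DS domain along the whole path.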

...

The disadvantage of this mechanism is that resources will not be allocated to low-priority traffic if there are packets in the higher priority queues, leading to the complete inaccessibility of some network services.

Figure 14 - Strict scheduling
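The starvation effect described above is easy to reproduce with a minimal strict-priority dequeue. The queue contents are hypothetical, and a larger number is assumed to mean a higher priority.

```python
from collections import deque

def strict_dequeue(queues):
    """Return the next packet from the highest-priority non-empty queue, or None."""
    for priority in sorted(queues, reverse=True):   # highest priority first
        if queues[priority]:
            return queues[priority].popleft()
    return None

# Hypothetical queues: priority 7 (critical) and priority 0 (best effort).
queues = {7: deque(["v1", "v2", "v3"]), 0: deque(["b1", "b2"])}

# Three transmission opportunities all go to the high-priority queue.
sent = [strict_dequeue(queues) for _ in range(3)]
waiting_low = list(queues[0])
```

As long as the priority 7 queue keeps receiving packets, the packets in queue 0 are never transmitted.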

...

With weighted scheduling, each queue receives resources, so no network service becomes completely inaccessible.

Figure 15 - Weighted scheduling
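A generic weighted round robin illustrates the principle. The text does not specify which weighted algorithm a particular device uses, so this is an assumption chosen for simplicity; the queue names, weights and packets are hypothetical.

```python
from collections import deque

def weighted_round(queues, weights):
    """Run one scheduling round: each queue may send up to weights[name] packets."""
    sent = []
    for name, weight in weights.items():
        for _ in range(weight):
            if not queues[name]:
                break                      # queue is empty, move on to the next one
            sent.append(queues[name].popleft())
    return sent

queues = {"high": deque(["h1", "h2", "h3", "h4"]), "low": deque(["l1", "l2"])}
weights = {"high": 3, "low": 1}            # "high" gets three times the share of "low"

first_round = weighted_round(queues, weights)
second_round = weighted_round(queues, weights)
```

Unlike strict scheduling, the low-priority queue sends a packet in every round even while the high-priority queue is still busy.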

...

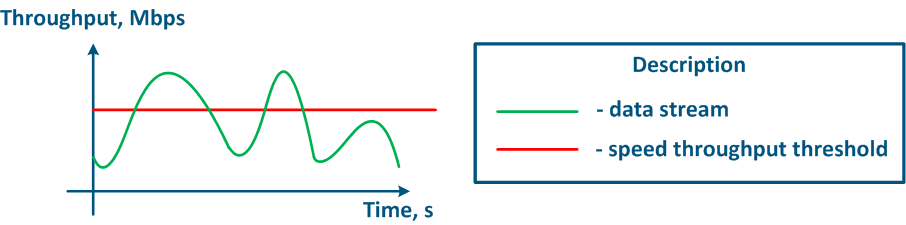

The principle of throughput limitation is to constantly measure the throughput of the data stream and to apply restrictions if this value exceeds the set threshold (Figure 16a,b). Throughput limitation in Infinet devices is performed according to the Token Bucket algorithm, where all data packets above the throughput threshold are discarded. As a result, losses occur, as described above.

Figure 16a - Unlimited data flow rate

Figure 16b - Limited data flow rate
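The general Token Bucket idea can be sketched as below. This is a textbook-style illustration, not the Infinet implementation: the class name, the burst size parameter and the explicit timestamps (used instead of a real clock to keep the example deterministic) are assumptions.

```python
class TokenBucket:
    """Policer sketch: packets that find too few tokens are discarded."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bytes_per_s = rate_bps / 8   # refill rate in bytes per second
        self.capacity = burst_bytes            # maximum number of stored tokens
        self.tokens = burst_bytes              # the bucket starts full
        self.last_time = 0.0

    def allow(self, packet_bytes, now):
        """Return True if the packet is forwarded, False if it is dropped."""
        elapsed = now - self.last_time
        self.last_time = now
        # Refill tokens for the elapsed time, never exceeding the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_bytes_per_s)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False

# Hypothetical limit: 8000 bit/s (1000 bytes/s) with a 1500-byte burst.
bucket = TokenBucket(rate_bps=8000, burst_bytes=1500)
decisions = [
    bucket.allow(1000, now=0.0),   # fits into the initial burst
    bucket.allow(1000, now=0.0),   # only 500 tokens left: dropped
    bucket.allow(1000, now=0.5),   # 500 tokens refilled in 0.5 s: forwarded
]
```

The second packet is discarded rather than delayed, which is the source of the losses mentioned above.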

...

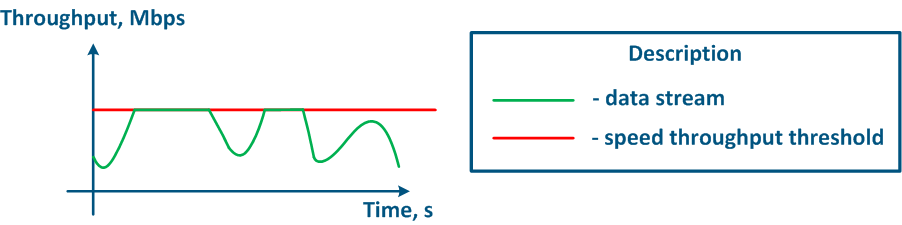

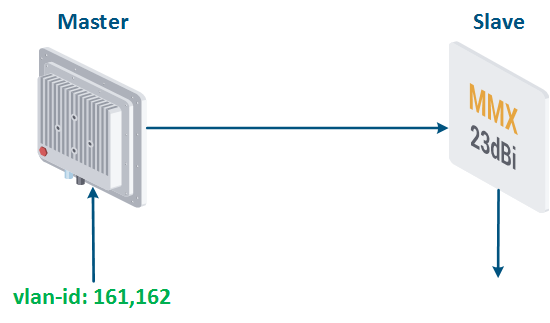

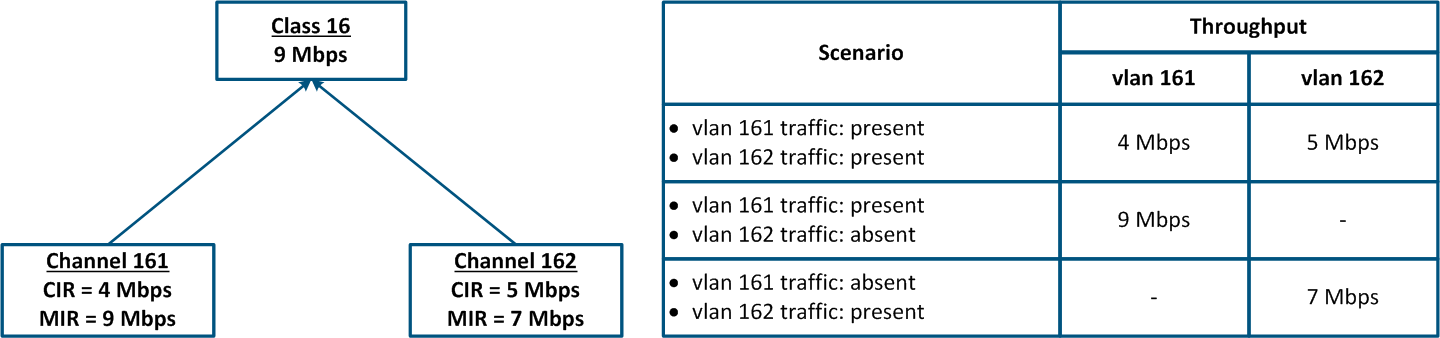

- Class 16 has been configured with a 9 Mbps throughput.

- Class 16 is the parent of the channels 161 and 162, i.e. the total traffic at these logical channels is limited to 9 Mbps.

- The traffic with VLAN ID 161 is associated with the logical channel 161; the traffic of VLAN 162 is associated with the logical channel 162.

- The CIR value for channel 161 is 4 Mbps and for channel 162 it is 5 Mbps. If both services actively exchange data, the threshold values for their traffic will be equal to the CIR of each channel.

- The MIR value for channel 161 is 9 Mbps and for channel 162 it is 7 Mbps. If there is no traffic in logical channel 162, then the threshold value for channel 161 will be equal to the MIR, i.e. 9 Mbps. In the other case, when there is no traffic in the logical channel 161, the threshold value for channel 162 will be equal to 7 Mbps.

Figure 17a - Throughput limitation for 2 traffic flows tagged with vlan-ids 161 and 162

Figure 17b - Hierarchical channel structure of the throughput limits for the traffic of vlans 161 and 162
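The threshold behaviour listed above can be expressed as a small function. It models only the cases described in the text (both channels active, or exactly one channel active); how a real device shares spare capacity in intermediate situations is not covered here. The data structure and function name are hypothetical.

```python
PARENT_LIMIT_MBPS = 9  # throughput configured for class 16

CHANNELS = {
    161: {"cir": 4, "mir": 9},
    162: {"cir": 5, "mir": 7},
}

def thresholds(active_channels):
    """Return {channel: threshold_mbps} for the set of channels that carry traffic."""
    active = set(active_channels)
    result = {}
    for channel in active:
        if active - {channel}:
            # A sibling channel is also active: each channel is held to its CIR.
            result[channel] = CHANNELS[channel]["cir"]
        else:
            # The channel is alone: it may grow to its MIR, within the parent limit.
            result[channel] = min(CHANNELS[channel]["mir"], PARENT_LIMIT_MBPS)
    return result

both_active = thresholds({161, 162})   # CIR applies to each channel
only_161 = thresholds({161})           # MIR of channel 161
only_162 = thresholds({162})           # MIR of channel 162
```

Note that the two CIR values add up to the 9 Mbps of the parent class, so both services can always receive their committed rate at the same time.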

...